You’re wrong – hackers are interested in your boring personal account, you are making it easy for them to get access, and it will likely end up being a bigger problem than you imagine.

Those are the stern words I want to use whenever I witness a friend doing the online equivalent of parking and leaving a stack of $100 bills on their car dashboard in a crime-ridden neighborhood. Instead I tend to suggest some easy steps to take to be more secure, which are almost invariably met with “it’s not a big deal”. I decided to write up my thoughts, so I can just point friends to this article and hopefully help others. This is absolutely not for altruistic reasons… I’ve had multiple experiences where somebody else’s bad online security habits resulted in nights and weekends of work for me and entire teams of people. I just want to sleep.

Hackers Want Your Stupid [insert lame service] Account

It seems absurd that your Lint Sculptures Discussion Forums password is of value to anybody… it’s just you and people you’ve met over the last 15 years that love to talk about dryer lint sculpting… security doesn’t matter. However, it was 15 years ago, so you chose a really lame password at the time (like “123456”), and now that an elite hacker has broken that code, they see your basic account details (your email, IP address, real name and city you live in). Again, who cares… that’s useless. Well, except you used the same password for everything back then, so with your email and password they can run a script to check 100,000 other sites and hey… looks like your genealogy, old photo sharing, and that antique Hotmail account you abandoned had the same password. Unfortunately, that banking thing you signed up for 12 years ago used that Hotmail address, and you forgot to unlink the Hotmail address from a few other accounts, including PayPal and LinkedIn. Now the hacker has the ability to access your LinkedIn account, change account credentials on your banking and possibly access accounts you don’t even remember you had. You can imagine how this gets problematic… the ability to send and receive from your email address typically provides the ability to get access to all other accounts, if by no other means than requesting a password reset. And this is just the annoying scenario where you have to deal with correcting identity theft on your own… at least you didn’t drag your friends down.

Instead, the hacker could exploit your Lint Sculptures Discussion Forums friends of 15 years. Does everybody need a direct message and 10,000 forum posts offering black market Viagra? No problem. Or how about a few messages to trusted friends to install this Lint Sculpting Simulation program… you know it doesn’t have a virus because your trusted friend of 15 years swears it’s great. Everybody wants to be part of a botnet, right? All of these acts may seem pointless to you, but hackers have a way of generating value (and money) from these pointless acts, and it isn’t much effort (a lot of it is automated), so it happens.

These scenarios may sound ridiculous, but two years ago I was contacted by a long-time friend who was traveling abroad: all of his possessions had been stolen, his family was stranded, and he needed me to send money. What was true is he was traveling with family; the rest was made up by a hacker that got enough information to know I was a friend that would help, knew when the family was traveling, and when the story might make sense. Everything the hacker needed to make this happen came from accessing worthless accounts.

Steps to Making Yourself More Secure

Security must be balanced with convenience. When being secure is a hassle, people naturally find (unfortunate) workarounds that make things less secure. If you require a password that is 20 characters long and random, look around the person’s desk for the Post-it (or possibly worse, in their “passwords.txt” file on their desktop). The sweet spot is a mild inconvenience that dramatically improves security. I find there are a few easy practices that fit into this sweet spot…

Two-factor Authentication

Systems that require two components to authenticate are substantially more secure than password-only systems. Accessing an account requires something you know (e.g. the password) and something you have, like a key. The “key” today is typically an application like Google Authenticator, or an SMS message with a code sent to your phone, both of which provide a unique code that is only valid for 1-5 minutes. Many services offer this, including Gmail, Facebook, Twitter, Dropbox, and a few banks (seriously banks, WTF?).

The beauty of Two-factor Authentication is, even if your password is breached, it doesn’t allow the hacker to access your account. So when you are at that hotel and using the guest computer with a key-logger to print your flight itinerary from your Gmail account, it doesn’t matter… the hacker only has 50% of what they need.

The inconvenience of adding Two-factor Authentication is typically an additional 20 seconds and, since many services allow you to say “remember me for 30 days”, it’s less than a minute a month (and… don’t use “remember me” on any shared machine).
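If you’re curious how an authenticator app comes up with those short-lived codes, here is a minimal sketch of the time-based one-time password scheme from RFC 6238, which apps like Google Authenticator implement. The function names (totp, verify_code) and the 30-second window are my own choices for illustration, and the secret is the published RFC test value, not anything a real service would hand you.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Return the one-time code for `secret` at `at_time` (RFC 6238, HMAC-SHA1)."""
    if at_time is None:
        at_time = time.time()
    # Both sides count how many `step`-second windows have passed since 1970.
    counter = int(at_time // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # "Dynamic truncation": the low 4 bits of the last byte pick a 4-byte slice.
    offset = digest[-1] & 0x0F
    number = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(number % 10 ** digits).zfill(digits)


def verify_code(secret: bytes, submitted: str,
                at_time: Optional[float] = None) -> bool:
    """Check a submitted code against the one expected right now."""
    return hmac.compare_digest(totp(secret, at_time), submitted)


# Hypothetical shared secret (the RFC 6238 test value), stored on the phone
# and on the server when you first enable two-factor authentication.
SECRET = b"12345678901234567890"

print(totp(SECRET, at_time=59))  # 287082 (matches the RFC test vector)
print(totp(SECRET))              # whatever the code is right now
```

The point to notice: the code depends on the secret on your phone and on the current time, so a key-logger that captures one code (and your password) has nothing useful a minute later.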

Unique Passwords

If I told you I had every lock I use in my life (home, office, safety deposit box, cars, bike lock, vacation house) re-keyed to use the exact same key, you’d probably agree that it would be disproportionately bad if somebody found my bike key. When you apply this to online habits, people seem oddly comfortable with one key for almost everything, and a special key for their bank account (but online, weak keys often provide access to special keys).

Use a different (and strong) password for everything. This, of course, is a hassle… nobody can remember 150 different strong passwords, especially when you have to change them all every 3 weeks when you get the latest exploit notice from Yahoo!

One solution is to have a hard password that is modified in a way that you know for each service. As an example, my password is “nS72!la^mq” and I add the first four letters of the website it uses, in reverse… so for Yahoo! it becomes “nS72!la^mqohaY” and for Google it is “nS72!la^mqgooG”. This has a few flaws, including making it hard to change passwords, but it’s a substantial improvement over “swordfish” for everything.
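For the literal-minded, the rule above fits in a few lines. This is just a sketch of the scheme as described; the function name site_password is made up for the example, and the base password is the sample from this post (so, obviously, don’t use it).

```python
def site_password(base: str, site_name: str) -> str:
    """Append the first four letters of the site name, reversed, to the base."""
    suffix = site_name[:4][::-1]
    return base + suffix


print(site_password("nS72!la^mq", "Yahoo"))   # nS72!la^mqohaY
print(site_password("nS72!la^mq", "Google"))  # nS72!la^mqgooG
```

It also shows the flaw: anybody who sees two of your passwords can work out the rule, and the base never changes.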

A better solution is a password manager. Services like LastPass and Passpack provide a secure way for you to store and retrieve complicated passwords. Legitimate services encrypt your data in a way where they don’t actually know or even have access to your password, so a hacker that steals their database ends up with a ton of encrypted files and no keys. While there are ways that could be exploited, these services are certainly better than any other options available at a consumer-level (and if you’re really paranoid, some make the source code available for you to keep the encrypted data only on your computer).
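Once a manager is doing the remembering, every password can be long and random. Most managers have a generator built in; the sketch below only shows the idea using Python’s standard secrets module. The function name, the 20-character default and the character set are assumptions for illustration, not how LastPass or Passpack actually do it.

```python
import secrets
import string

# Letters, digits and punctuation; some sites reject certain symbols,
# so a real generator usually lets you trim this set.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Build a password from cryptographically strong random choices."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_password())    # a different 20-character password every run
print(generate_password(32))  # longer, for sites that allow it
```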

Whatever you do, never, ever, ever keep a password file on your computer, even if you think you’re clever by naming it “groceries.doc”.

Don’t Share Accounts

Sharing accounts invariably leads to other poor security practices, like the need to email everybody when a password changes or having a shared password file somewhere. And, when one of the people sharing your account gets hacked, this means the shared account gets hacked (and probably every other account in that shared password file so cleverly named “groceries.doc”).

This isn’t 1997 – these days there are very few reasons why each person can’t have their own credentials, especially for email. Only share accounts when separate accounts are not possible (I’m looking at you, Netflix). If you do need to share accounts, use a password manager that offers sharing of specific entries, which means that only the minimum exposure is shared and it is simple to update credentials (Passpack does this nicely).

Don’t Click Links

Okay, so the Interwebs sort of suck if you follow this rule exactly and dead-end on a website. However, for any site you are going to access and provide your credentials, enter the URL directly.

Did you just receive a weird email from PayPal telling you that Ned just paid you $42 for a lint sculpture you don’t remember selling? Instead of clicking on the “collect your money” link in the email, type “paypal.com” in your browser bar directly and see if the transaction is in your account history. Many phishing emails look and smell like the real thing because it is pretty simple to copy the real thing and send you to “paypaI.com” (see what I did there? that was a capital “i”, not an “l” in that URL) to steal your password. Of course, if you’re using Two-factor Authentication, a stolen password is less of a problem.
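If you want to see why the eyeball test fails and a literal comparison doesn’t, here is a small sketch that checks where a link really points. The function name link_goes_to and the sample URLs are made up for the example; it only compares the host in the link against the domain you expected and is not a full phishing detector.

```python
from urllib.parse import urlsplit


def link_goes_to(url: str, expected_domain: str) -> bool:
    """True only if the link's host is the expected domain or a subdomain of it."""
    host = (urlsplit(url).hostname or "").lower()
    expected = expected_domain.lower()
    return host == expected or host.endswith("." + expected)


print(link_goes_to("https://www.paypal.com/activity", "paypal.com"))       # True
print(link_goes_to("https://www.paypaI.com/collect", "paypal.com"))        # False (capital "i")
print(link_goes_to("https://paypal.com.example.net/login", "paypal.com"))  # False
```

Typing the address yourself does the same job with no code at all, which is why it is the rule.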

Secure Your Family

I used to get sick a couple of times a year… no big deal, just a sniffle every now and then. When I had kids, my health status flipped and it seemed like a couple of times a year I wasn’t infected with whatever was festering in the cesspool of Cheerios, finger paint, juice boxes and runny noses known as preschool.

My point is, there is almost certainly going to be an overlap of your family’s online account footprint, and when one person gets hacked it will likely be a vector for the rest of your family. Sharing documents in Dropbox, G Suite (Google Docs), or Amazon family all provide opportunities for a hack to spread. Protect your accounts by having those close to you keep their accounts secure (and… that is the real reason I wrote this post – pure selfishness as I protect my own accounts).

Do you have other tips or suggestions to help make the average person more secure? Share them in the comments section!

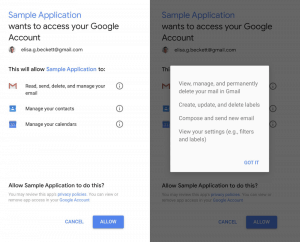

Some applications legitimately need elevated permissions to provide the service they offer, like inbox management, automatic scheduling, or even shopping deal comparisons. Many of these apps only access your data in the way necessary to provide the service, but there are many that take full advantage of access to your data and leverage your data for their benefit. According to articles on

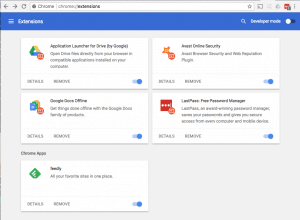

In addition to granting companies access directly, web browser extensions can expose data from every website you visit. These Extensions in Chrome, and Add-Ons, Extensions, and Plugins in Firefox, provide enhanced functionality from password management to page translation, ad blocking, and simple video downloads. To provide these services, many extensions get access to everything you do in the browser. For example, a news feed reader has permission to “Read and change all your data on the websites you visit” – this means every page visited and all content on that page is accessible by the news reader extension… your web mail, your Facebook messages, your dating sites, medical issues you research… all available to some company that organizes news headlines for you.

Installing applications and linked account creation on websites is simpler than ever. The downside to this ease of access is that users typically spend little time scrutinizing the application. If you are giving access to your private data, spend the time to understand who is getting access, and how they will use your data. A simple web search for the application and “security” or “trust” can reveal what others experienced. If the company doesn’t have a website with the ability to contact them, and a published policy about handling your private data, there is a good chance securing your private data isn’t a real concern for them, and it should be for you!

Within 24 hours of the breach I started receiving emails that threatened to release the customer data and publicly announce the breach if we didn’t pay a sum of money. My response to the blackmail was letting them know I would consider their proposal, but ultimately the damage they would do is to customers that didn’t deserve to be exploited, and to employees, good people that already feel a ton of weight from the responsibility. They gave me a few days to make a decision.

Okay, Brett… fine, ISPs can do this shifty stuff, but this sounds like tinfoil hat territory. Well, maybe, but

An ISP is closer to the phone company as a utility – while you may have some choice in which ISP you use, frequently these choices are very limited and, if selling private customer information is a standard practice, your only alternate choice is not having Internet access. If you found out that the phone company listened in on your conversations and sold transcripts to other companies, you’d likely be outraged.
